A. Overview of the SPEC Token

At Spectral, we are pioneering the Onchain Agent Economy with Syntax, which lets users create and operate onchain agents that change how they interact with Web3. Through natural language conversations, users can delegate complex tasks to their agents and streamline their onchain activities. To accelerate the adoption of agentic technology, we’re implementing a tokenomics model designed to foster organic network effects and incentivize participation on the Syntax network. These incentives are aligned with our long-term vision of sustainable growth and engagement within a thriving ecosystem of Onchain Agents.

Central to this vision is the SPEC token, an ERC20 governance token that empowers community stakeholders to actively participate in the network's decision-making processes. SPEC enables users building agents on Spectral Syntax and the Inferchain to propose and vote on platform upgrades, modifications, and parameter adjustments, ensuring a decentralized and inclusive approach to governance. Beyond governance, SPEC serves as a utility and value exchange mechanism, integral to the monetization and operation of agents within the network.

B. Incentive and Utility Mechanism

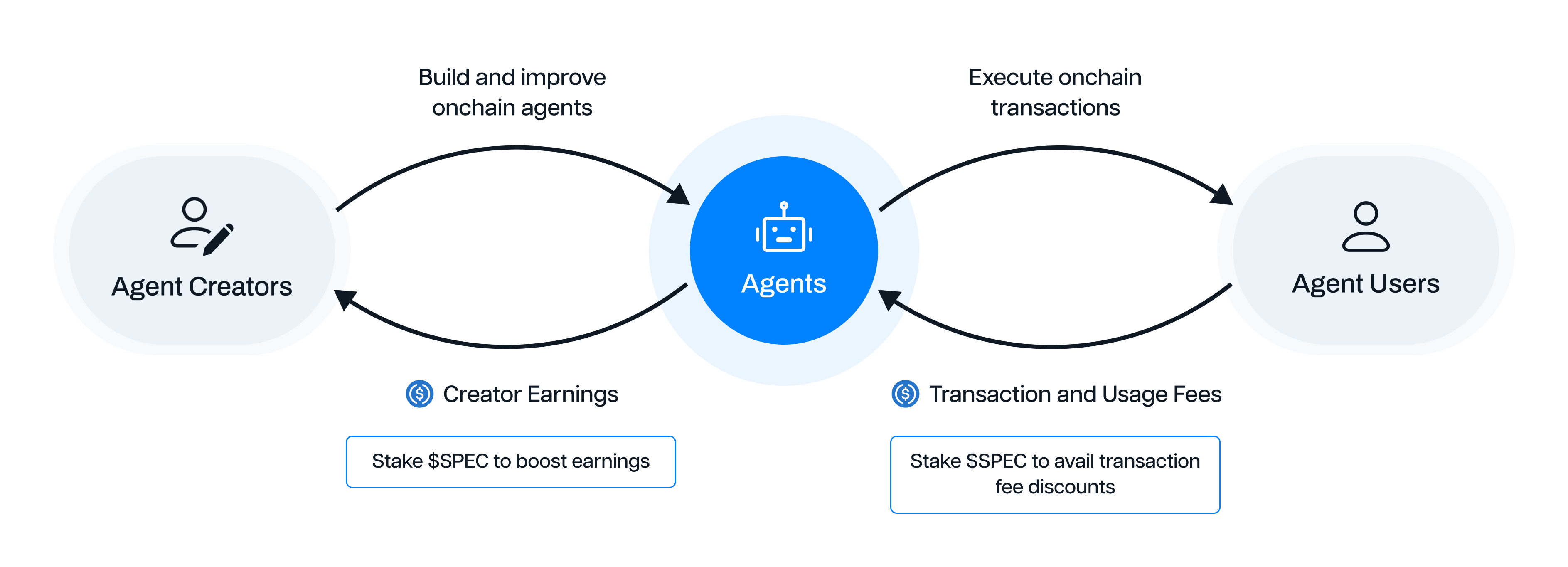

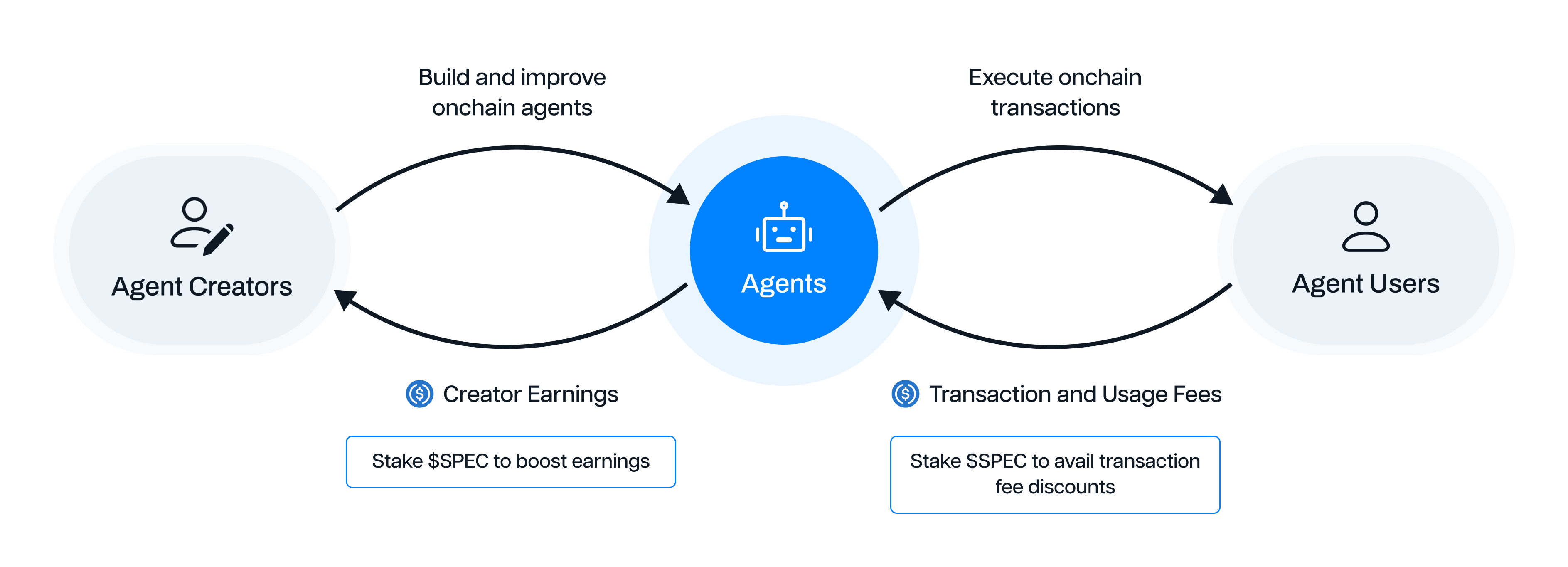

The Syntax network is a marketplace that connects three actors: Creators, Users, and Agents. Creators build Agents and publish them on the Syntax network, and can use Syntax’s AgentBuilder to build them through natural language instructions (Creators can also be Users of other Agents on the network). Users initiate tasks (jobs) for the AI Agents available on the Syntax network. Agents interpret their users' requests, then compose executable code (a Python script) that lets them perform the requested actions autonomously over a user-specified timeframe.

These three actors come together to create a vibrant network effect on Syntax that in turn reinforces the incentive mechanisms that enable these actors to accelerate agent adoption. Here’s how this mechanism works:

Diagram: Incentive and Utility Mechanism on Spectral Syntax Network

- User-Agent Interactions: Users pay Transaction Fees and Usage Fees for using the Agents on the Syntax network, and Agents utilize these funds to facilitate the execution of jobs created by the user.

- Creator-Agent Interactions: Agent Creators on the Syntax network receive ongoing Creator Earnings, calculated as a percentage of the value of every transaction their agent makes while serving users. More efficient, powerful agents are likely to attract more users and conduct more transactions, so a Creator is incentivized to keep improving their agents.
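The Creator Earnings rule above can be illustrated with a minimal sketch. The rate `CREATOR_EARNINGS_RATE` and the function name `creator_earnings` are hypothetical; this document does not state the actual percentage or how it is computed onchain.

```python
from decimal import Decimal

# Hypothetical rate: the actual Creator Earnings percentage is not stated here.
CREATOR_EARNINGS_RATE = Decimal("0.01")


def creator_earnings(transaction_values, rate=CREATOR_EARNINGS_RATE):
    """Sum a creator's earnings as a fixed percentage of each transaction value."""
    return sum((value * rate for value in transaction_values), Decimal("0"))


# Values of three transactions an agent made while serving users (illustrative).
agent_transactions = [Decimal("100"), Decimal("250"), Decimal("50")]
earnings = creator_earnings(agent_transactions)
```

Because earnings scale with the total transaction value, an agent that serves more users earns its Creator more under this model, which is the incentive the bullet describes.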

C. Staking Benefits

Spectral’s governance token, SPEC, is the mechanism our tokenomics uses to distribute value across the network, as follows:

- Users can stake SPEC to receive a discount on their transaction fees. This mechanism allows users to secure increasing yield as their engagement in agentic trading and other onchain activities increases.

- Agent Creators can stake SPEC to earn a higher percentage of creator earnings on every transaction made by their agent. This incentive encourages creators to keep investing effort in improving the technical capabilities of their onchain agents. The tokens staked by a creator yield more as the creator’s agent serves more users.
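As a rough illustration of the two staking benefits above, the sketch below maps a staked SPEC amount to a fee discount for users and to a creator earnings rate for creators. Every threshold and rate here is a hypothetical placeholder, as is the tiered design itself; the document does not specify how stake size translates into benefits.

```python
from decimal import Decimal

# All thresholds and rates below are hypothetical placeholders.
# Each tier is (minimum SPEC staked, value), ordered from highest to lowest.
FEE_DISCOUNT_TIERS = [
    (Decimal("10000"), Decimal("0.30")),
    (Decimal("1000"), Decimal("0.15")),
    (Decimal("100"), Decimal("0.05")),
]
CREATOR_RATE_TIERS = [
    (Decimal("5000"), Decimal("0.02")),
    (Decimal("500"), Decimal("0.015")),
]
BASE_CREATOR_RATE = Decimal("0.01")


def tier_value(staked, tiers, default):
    """Return the value of the highest tier whose minimum stake is met."""
    for min_stake, value in tiers:
        if staked >= min_stake:
            return value
    return default


def discounted_fee(base_fee, staked):
    """Transaction fee a user pays after the staking discount."""
    discount = tier_value(staked, FEE_DISCOUNT_TIERS, Decimal("0"))
    return base_fee * (Decimal("1") - discount)


def creator_rate(staked):
    """Creator earnings rate after the staking boost."""
    return tier_value(staked, CREATOR_RATE_TIERS, BASE_CREATOR_RATE)


fee_without_stake = discounted_fee(Decimal("2.00"), Decimal("0"))
fee_with_stake = discounted_fee(Decimal("2.00"), Decimal("1500"))
boosted_rate = creator_rate(Decimal("5000"))
```

A continuous curve would serve equally well; tiers are used here only because they are easy to read.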

To further encourage adoption of the agentic paradigm, we may bring forward governance proposals for enhanced incentive mechanisms that improve utility for SPEC community members. Such proposals will aim to encourage Agent Creators to invest time and effort in the continuous improvement of their agents, thus amplifying the overall value created on the Syntax network.

D. Governance

The SPEC token lets actors in the system participate actively in the governance of the Decentralized Autonomous Organization (DAO).

Voting on Network Improvement Proposals: SPEC token holders can participate in the governance process by submitting and voting on proposals related to network upgrades, feature implementations, fee structures, and standardized challenge rules. When proposals are submitted and voted on, the DAO follows these guidelines:

- Voting power is proportional to the amount of SPEC a user holds.

- Each proposal has a designated voting period.

- Token holders express support or opposition during the voting period.

- Votes can be cast directly or delegated to other addresses.

- Delegating voting power promotes broader participation.

- A proposal must meet a minimum quorum requirement to be valid.

- A proposal typically needs approval from 40% of total votes to succeed.

If a proposal meets both the quorum and approval requirements, it is considered successful. The proposed changes are then implemented in the protocol according to the terms outlined in the proposal.
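The voting guidelines above can be sketched as a simple tally. Only the 40% approval figure comes from this document. The quorum value (`QUORUM_FRACTION`), measuring quorum against total supply, measuring approval against votes cast, and single-level delegation are all assumptions made for illustration, and the function names are hypothetical.

```python
from fractions import Fraction

APPROVAL_THRESHOLD = Fraction(40, 100)  # from the text: typically 40% of total votes
QUORUM_FRACTION = Fraction(10, 100)     # hypothetical: no quorum value is given


def voting_power(balances, delegations):
    """Credit each holder's SPEC balance to their delegate, or to themselves.

    Assumes single-level delegation: power is not passed on a second time.
    """
    power = {}
    for holder, balance in balances.items():
        delegate = delegations.get(holder, holder)
        power[delegate] = power.get(delegate, 0) + balance
    return power


def tally(balances, delegations, votes, total_supply):
    """Tally token-weighted votes and check the quorum and approval requirements.

    `votes` maps a voter to True (support) or False (opposition).
    """
    power = voting_power(balances, delegations)
    votes_for = sum(power.get(voter, 0) for voter, support in votes.items() if support)
    votes_against = sum(power.get(voter, 0) for voter, support in votes.items() if not support)
    total_cast = votes_for + votes_against
    quorum_met = total_cast >= QUORUM_FRACTION * total_supply
    approved = total_cast > 0 and votes_for >= APPROVAL_THRESHOLD * total_cast
    return {
        "votes_for": votes_for,
        "votes_against": votes_against,
        "quorum_met": quorum_met,
        "approved": approved,
        "passed": quorum_met and approved,
    }


# Illustrative data: carol delegates her 100 SPEC to alice and does not vote.
balances = {"alice": 600, "bob": 300, "carol": 100}
delegations = {"carol": "alice"}
votes = {"alice": True, "bob": False}
result = tally(balances, delegations, votes, total_supply=10_000)
```

In this example alice votes with 700 SPEC of power (her own 600 plus carol's delegated 100) against bob's 300, so the proposal meets both the assumed quorum and the approval threshold.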

- Influencing Governance Parameters: Token holders have the ability to propose and vote on adjustments to governance parameters, including voting rules, quorum thresholds, and voting periods, contributing to the agility and responsiveness of the platform's governance model.

- Staking for Agent Operations: On Spectral Syntax, staking gives users privileged access for monetizing the Agent network. SPEC holders can use their tokens to influence how Spectral monetizes the Agent network, for example by proposing platform fee changes, suggesting strategic partnerships, or modifying content moderation rules.

- Participating in Smart Contract Upgrades: Through DAO governance, SPEC token holders can vote on proposed changes to Spectral's smart contracts. This allows for the platform's flexibility and adaptability to emerging technologies and security enhancements.

- Allocating Funds for Community Initiatives: The DAO may allocate a portion of the platform's funds to support community-driven initiatives, research and development, marketing efforts, or partnerships. This mechanism empowers the community to drive initiatives that benefit the platform as a whole.

- Shaping the Future of Spectral: Overall, holding SPEC tokens grants individuals the opportunity to actively shape the future of the Spectral Network by participating in various governance activities, ensuring a transparent and inclusive decision-making process.